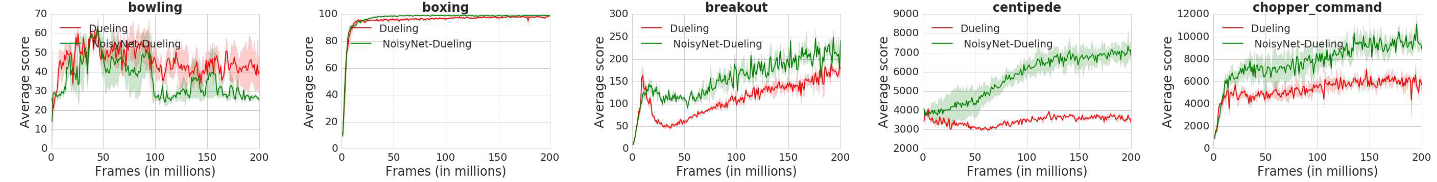

“Noisy Networks for Exploration” (2018) by Meire Fortunato et al. introduces NoisyNet, a deep reinforcement learning agent that adds parametric noise to its network weights. The noise makes the agent’s policy stochastic, and that stochasticity drives exploration; the noise parameters are learned by gradient descent together with the rest of the weights. The approach is simple to implement and adds little computational overhead. When NoisyNet replaces the conventional exploration heuristics in A3C, DQN, and dueling agents (entropy reward for A3C, ε-greedy for DQN and dueling), scores improve substantially across a wide range of Atari games, in some cases taking the agent from sub-human to super-human performance.
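
To make the idea of noise in the weights concrete, here is a minimal sketch of the forward pass of a noisy linear layer, written in plain Python rather than a deep learning framework. It computes y = (μ_w + σ_w ⊙ ε_w) x + μ_b + σ_b ⊙ ε_b with independent Gaussian noise per weight. The class name `NoisyLinear`, the helper `greedy_action`, and the default initial values are assumptions made for this illustration, not the authors' code, and training μ and σ by gradient descent is left out.

```python
import math
import random


class NoisyLinear:
    """Forward pass of a linear layer with Gaussian noise on weights and biases.

    Illustrative sketch only: in a real agent, mu and sigma are trained by
    gradient descent; here they are just initialised and used.
    """

    def __init__(self, in_features, out_features, sigma_init=0.017, seed=0):
        self.rng = random.Random(seed)
        bound = math.sqrt(3.0 / in_features)  # assumed initial range for mu
        self.mu_w = [[self.rng.uniform(-bound, bound) for _ in range(in_features)]
                     for _ in range(out_features)]
        self.mu_b = [self.rng.uniform(-bound, bound) for _ in range(out_features)]
        # One noise scale per weight and per bias (assumed constant start value).
        self.sigma_w = [[sigma_init] * in_features for _ in range(out_features)]
        self.sigma_b = [sigma_init] * out_features
        self.resample_noise()

    def resample_noise(self):
        """Draw fresh standard-normal noise for every weight and bias."""
        self.eps_w = [[self.rng.gauss(0.0, 1.0) for _ in row] for row in self.mu_w]
        self.eps_b = [self.rng.gauss(0.0, 1.0) for _ in self.mu_b]

    def forward(self, x, noisy=True):
        """Return the layer output; noisy=False uses only the mean weights."""
        scale = 1.0 if noisy else 0.0
        out = []
        for j in range(len(self.mu_b)):
            total = self.mu_b[j] + scale * self.sigma_b[j] * self.eps_b[j]
            for i, x_i in enumerate(x):
                w = self.mu_w[j][i] + scale * self.sigma_w[j][i] * self.eps_w[j][i]
                total += w * x_i
            out.append(total)
        return out


def greedy_action(q_values):
    """Index of the largest Q-value; no epsilon-greedy step is involved."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


# Usage: treat the layer output as Q-values and act greedily on them.
layer = NoisyLinear(in_features=4, out_features=3)
state = [0.5, -1.0, 0.25, 2.0]
action = greedy_action(layer.forward(state))
layer.resample_noise()  # new noise gives a differently perturbed policy
```

In this sketch the agent always acts greedily on the noisy outputs, so the only source of exploration is the resampled noise. That is the role ε-greedy plays in a standard DQN.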

Link to the paper: https://arxiv.org/abs/1706.10295